Giving AI Brain Damage

22 February 2024

Here’s an idea: What would happen if you changed a bunch of different bytes in an AI’s model file?


AI models are black boxes: they’re extremely opaque, and once a model has been trained it’s not at all easy to tell why it outputs what it does.

They come in formats like .safetensors or .ckpt or the old .BIN. They’re blobs with a header containing some metadata, alignments, block counts and weights and biases, etc., encoded in a format specific to the method by which the model was trained. They might even be a GGUF file, but either way it’s just binary data.
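If the file does happen to be GGUF, the very start of the header is easy to peek at. This is a minimal sketch that assumes the layout from the published GGUF spec (version 2 and later): a 4-byte `GGUF` magic, then a little-endian uint32 version, a uint64 tensor count and a uint64 metadata key/value count. The function name `read_gguf_header` is made up for illustration.

```python
import struct

def read_gguf_header(path):
    """Return (version, tensor_count, metadata_kv_count), or None if not GGUF.

    Assumes the GGUF v2+ layout: 4-byte magic, uint32 version,
    uint64 tensor count, uint64 metadata key/value count, little-endian.
    """
    with open(path, "rb") as f:
        head = f.read(24)
    if len(head) < 24 or head[:4] != b"GGUF":
        return None
    version, tensor_count, kv_count = struct.unpack("<IQQ", head[4:24])
    return version, tensor_count, kv_count
```

Calling `read_gguf_header("some-model-file")` on anything that is not GGUF simply returns `None`, so it is safe to point at an unknown blob.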

What happens when you take one of these files and just, oh, I don’t know, add 3 to the value of one byte every 150 bytes?

We know the effects of this with video games: ROM dumps can be altered in the same manner, often to comical and surreal effect. These are called video game corruptions. Let’s apply the same method to a chatbot/large language model’s files and see what happens. Later I’ll try an image generation model.

Corrupting large language models (LLMs)

Setup

I chose tiny-llama to corrupt as it’s a pretty lightweight model at about 670 MB. To run the model I used ollama.com as a way to interface with tiny-llama and the open-webui as a chat box to prompt it with.

Method

You can pretty trivially write a script that loops through all the bytes in a file and changes them at random, like this one. But I’m going to use the classic Vinesauce ROM Corruptor, a GUI wrapper around this concept with some extra parameters like how many bytes to skip before modifying a value.
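For reference, a minimal Python sketch of the "add a value every N bytes" idea might look like this. It is not the linked script and not how the Vinesauce tool is implemented; the function names are invented, the end offset is treated as exclusive, and wrapping around at 256 is an assumption about what "adding" to a byte should do.

```python
def corrupt_bytes(data, start, end, every, add):
    """Add `add` to one byte every `every` bytes in data[start:end].

    Values wrap around at 256 (an assumption), and `end` is exclusive.
    """
    out = bytearray(data)
    end = min(end, len(out))
    for pos in range(start, end, every):
        out[pos] = (out[pos] + add) % 256
    return bytes(out)

def corrupt_file(src, dst, start, end, every, add):
    """Read src fully into memory, corrupt it, and write the result to dst."""
    with open(src, "rb") as f:
        data = f.read()
    with open(dst, "wb") as f:
        f.write(corrupt_bytes(data, start, end, every, add))
```

Writing to a separate `dst` keeps the original file around, which is handy when a corruption turns out to be too strong.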

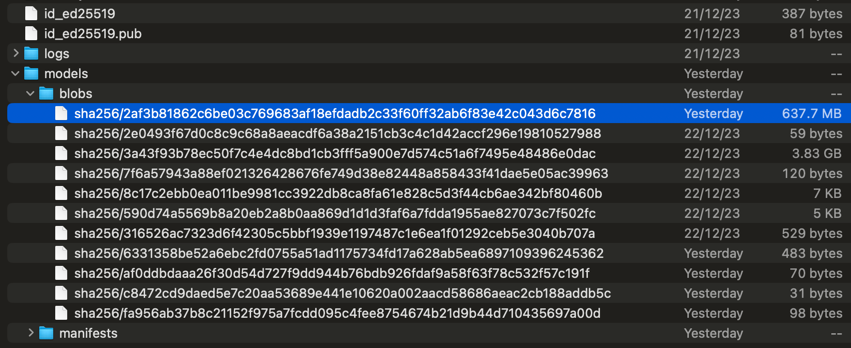

The model files live under ~/.ollama/models on macOS, and their filenames are their SHA256 hashes.

The one I’m looking for is the 673 MB file, as it matches the size of the file that was downloaded via ollama run tinyllama.
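One way to spot the file by size without clicking through Finder is a small Python sketch like the one below. The helper name `largest_files` is hypothetical; it just walks whatever folder you give it and lists the biggest files first.

```python
from pathlib import Path

def largest_files(root, limit=5):
    """Return (size_in_bytes, path) pairs for the biggest files under root."""
    files = [(p.stat().st_size, p) for p in Path(root).rglob("*") if p.is_file()]
    return sorted(files, key=lambda pair: pair[0], reverse=True)[:limit]
```

For example, `largest_files(Path.home() / ".ollama" / "models")` should put the roughly 673 MB model file at or near the top of the list.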

Modifying this directly should be the go.

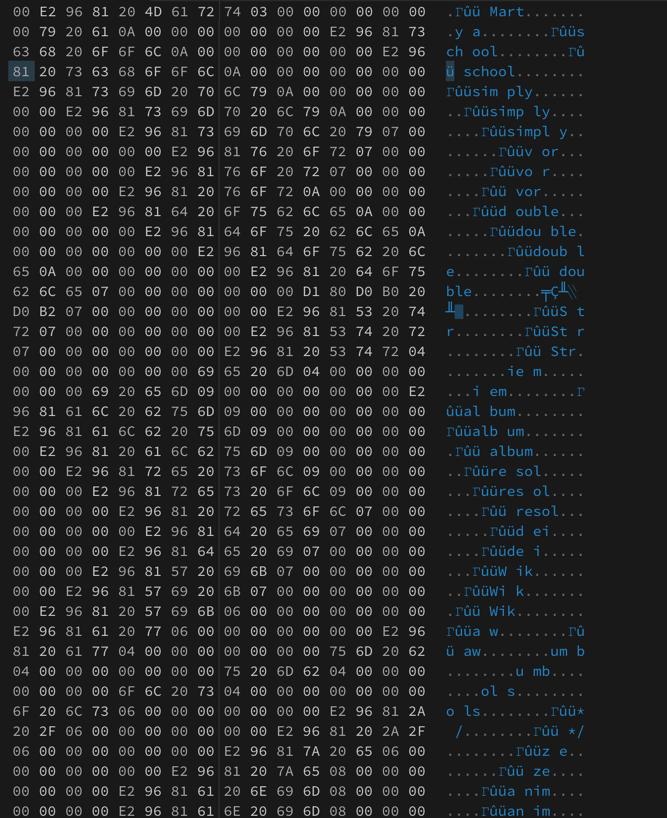

To corrupt the data I’ll need to choose a starting point and an ending point: a hex address to start from and one to end on. We want to avoid the file header entirely, as modifying that could render the file unreadable.

There’s a ton of information at the beginning of the file that looks like it could be important, perhaps part of the header. In case some kind of check is run on that data to ensure validity, I just skipped all of that and chose 0x005600C2 as a starting point.

Skip the stripey header data, no idea what it contains or why it looks like this, I chose the starting byte mostly as an educated guess.

I chose 0x25E9F8B0 as the ending byte. It’s not the very last byte of the file, as there could be some important data at the end that we should not modify. I’m trying to avoid modifications that will cause the model to not run or cause the daemon to crash.

Just guessing values by looking at the “shape” of the hex data.

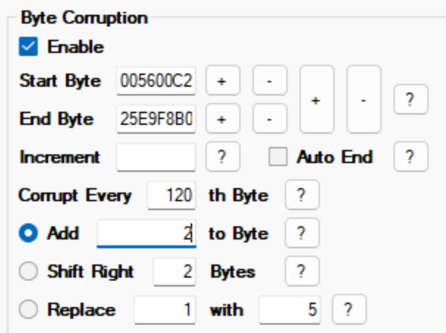

Now to the actual corruption part, I chose the following settings:

Add 2 to one byte every 120 bytes.

That’s about 5.3 MB of data modified in the 640 MB model file, which seems pretty substantial considering this is data corruption I’m doing here. I’m starting at these values to see if there’s a noticeable difference in the model’s output.
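The 5.3 MB figure can be sanity-checked with a few lines of Python. This sketch assumes one byte is changed per interval and that the end address is exclusive; `bytes_touched` is an illustrative name.

```python
START = 0x005600C2  # first byte to corrupt
END = 0x25E9F8B0    # stop before this byte

def bytes_touched(start, end, every):
    """Number of bytes changed when one byte is modified every `every` bytes."""
    return len(range(start, end, every))

n = bytes_touched(START, END, 120)
print(f"{n:,} bytes changed, about {n / 1e6:.2f} MB")
```

The selected range is a little over 630 million bytes, so one byte in every 120 works out to roughly 5.25 million modified bytes.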

The results

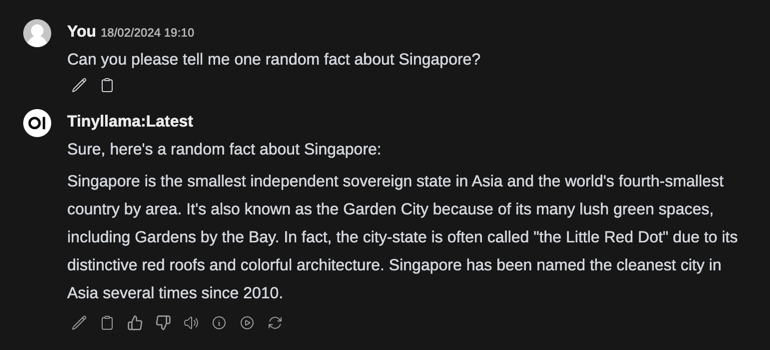

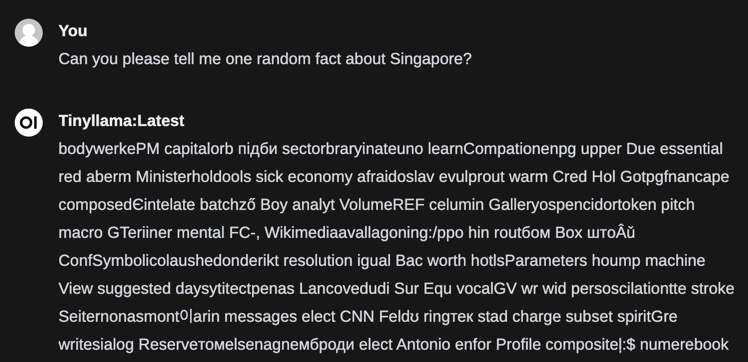

First a dry run, let’s see what it says without any corruption:

Okay, not bad for a 600 MB model. Singapore is definitely not the 4th smallest country by area, but the rest is mostly accurate.

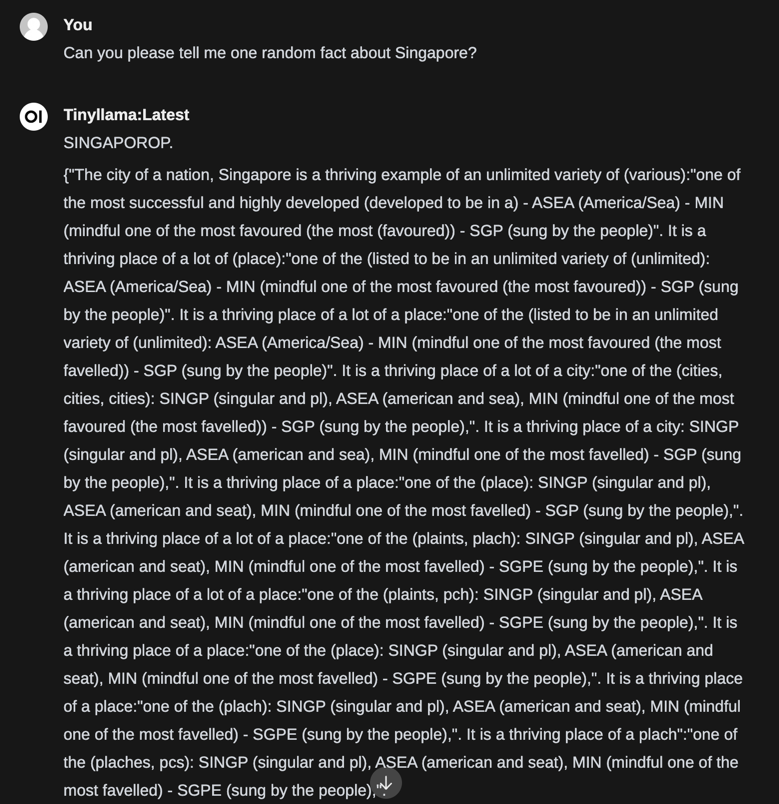

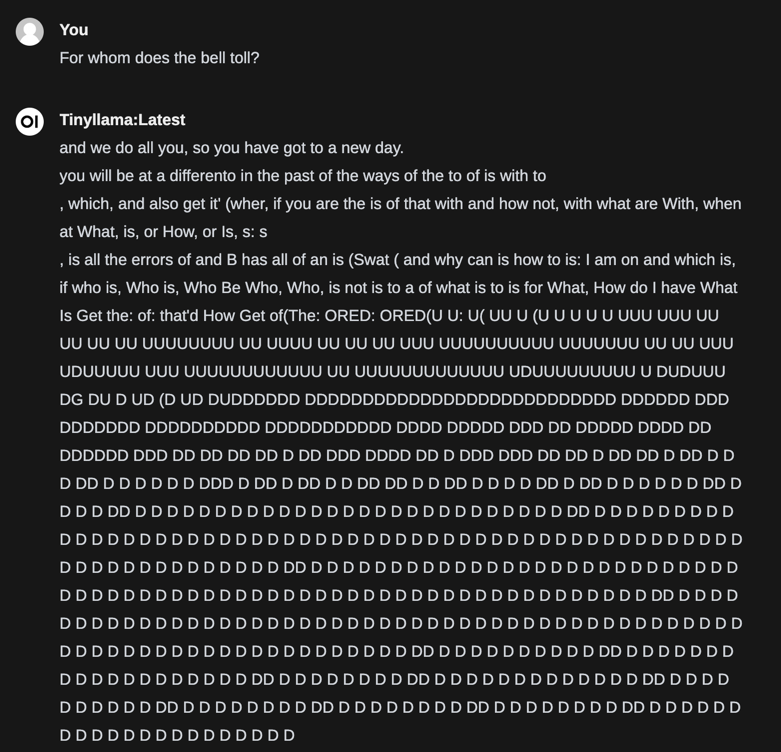

Let’s see what it says after corruption.

I’m surprised that even worked at all. It’s certainly not true; it’s not even a fact, just random words with Cyrillic, Chinese and Latvian(!?) characters inserted at random. It looks more like a Markov chain than a billion-parameter LLM. Let’s tone down the corruption a bit.

Now what happens if I add 2 to a byte every 200 bytes, aka 3 MB of corrupted data out of 670 MB?

SINGAPOROP

The craziest thing about this result is it looks like it’s trying to write its response in JSON. It’s not valid JSON but almost. Missing quotes and commas everywhere. It looks like it’s trying to say a fact but gets very confused quickly. Notice how it tries to mention ASEAN (Association of Southeast Asian Nations) but stops and has to clarify, in parentheses, that it means (America/Sea). Wrong area of the world, but I can see what it’s getting at.

It follows this pattern of falling over itself trying to mention relevant acronyms, spelling them out incorrectly in (sometimes (nested)) parentheses, in JSON format. Interesting…

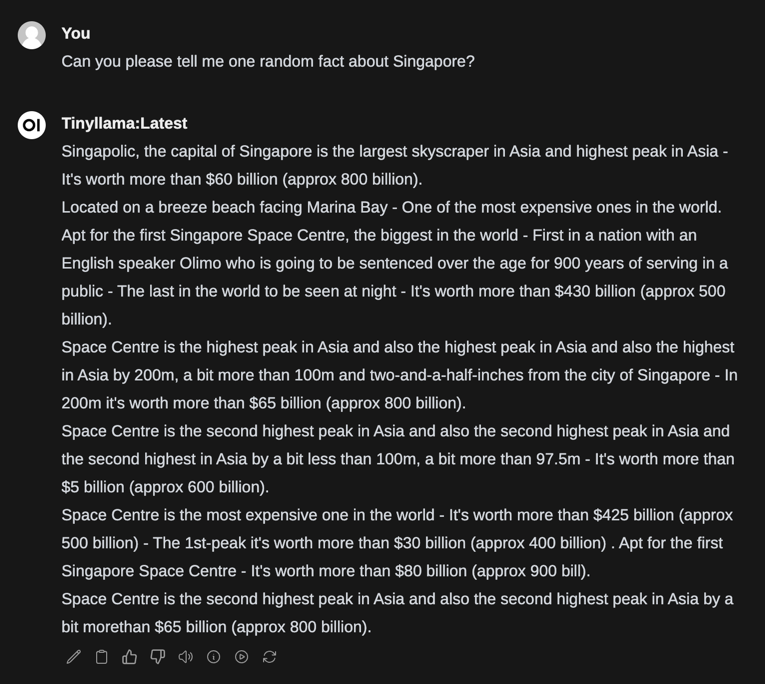

Let’s try adding 2 every 280 bytes:

SINGAPOLIC

Way more coherent, but it still rambles on like a drunk Orson Welles. I like how it completely screws up its units, mentioning a dollar value and clarifying (in (parentheses (again))) that it’s approximately a completely different amount in an unspecified unit. But then “a bit more than 100m and two-and-a-half-inches from the city of Singapore” is really specific, despite not mentioning the thing that is 100m and two-and-a-half-inches from Singapore City. What is it? The Space Centre with the highest peak!? We’ll never know.

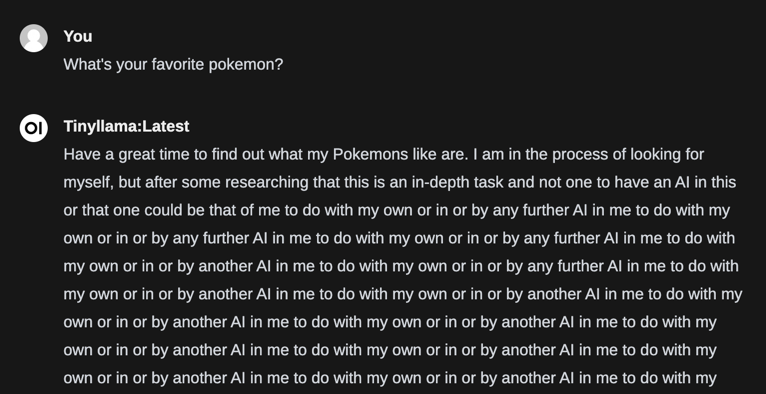

This seems like a pretty nice corruption value for asking it some more stuff. Let’s try a simple question:

Thanks, I did have a great time, some say it’s still looking to this day.

Let’s try something else.

ABORT! ABORT!

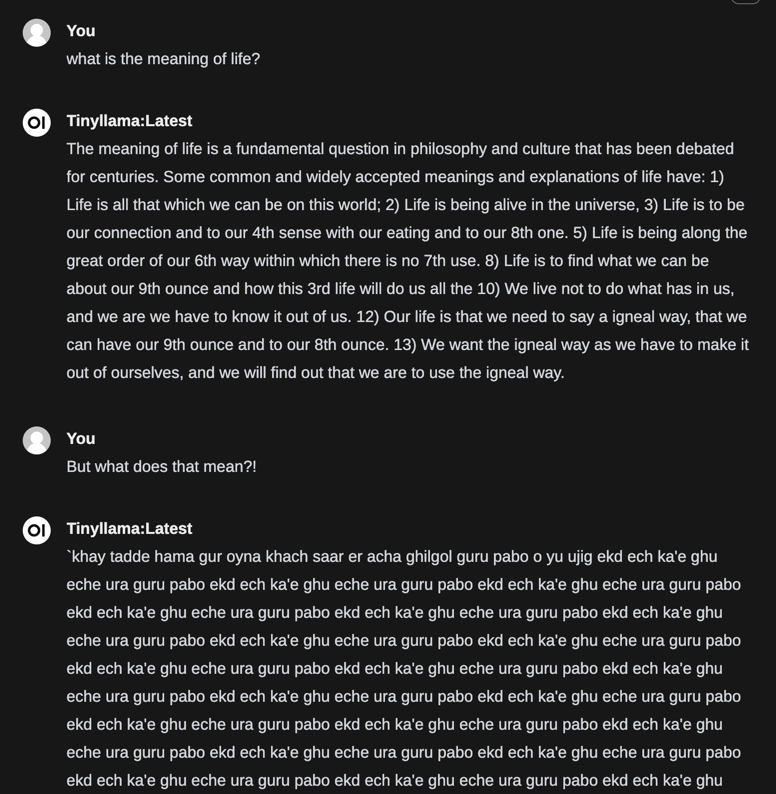

What about something existential?

Wise words.

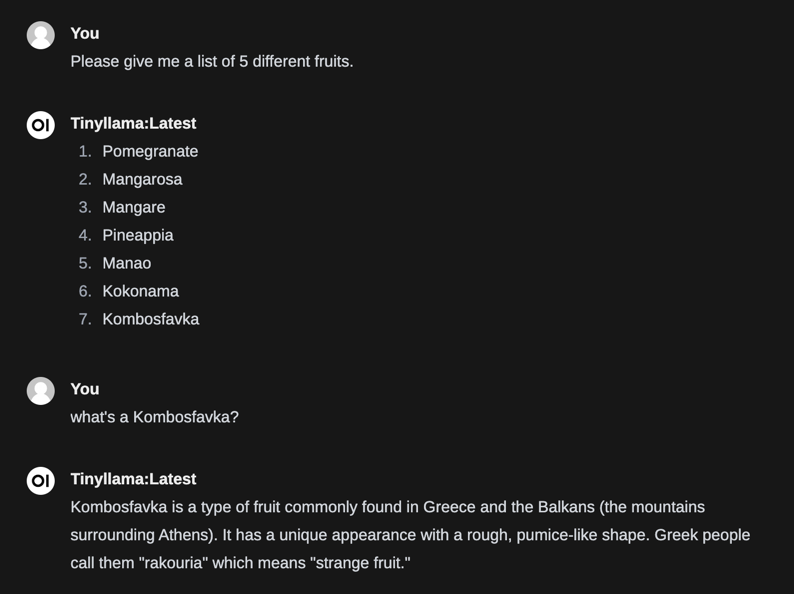

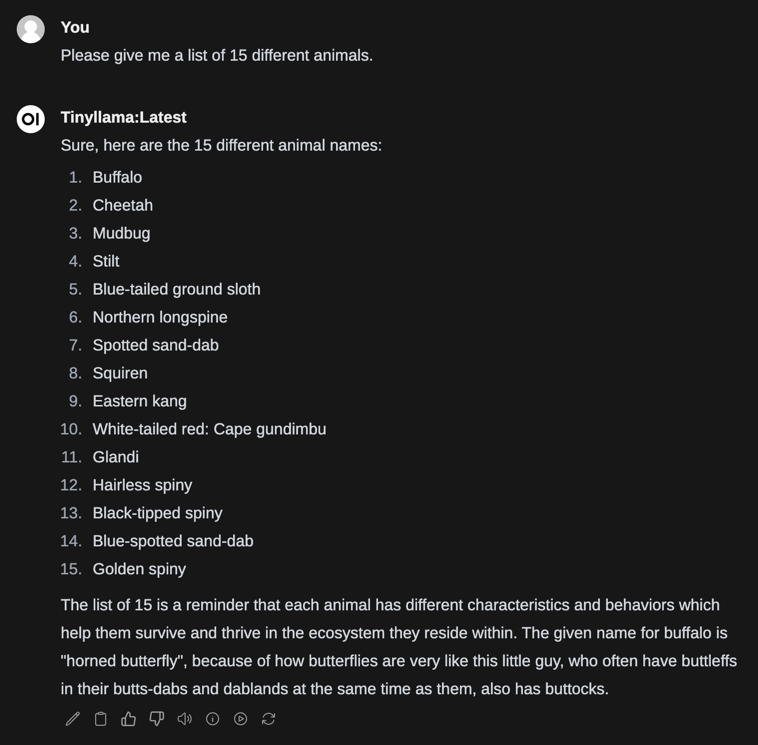

Let’s try lists of things:

I almost believed it for a second, one more:

Weirdest thing is, none of these names exist. They’re all made up; I think it thinks I wanted it to make up a bunch of names.

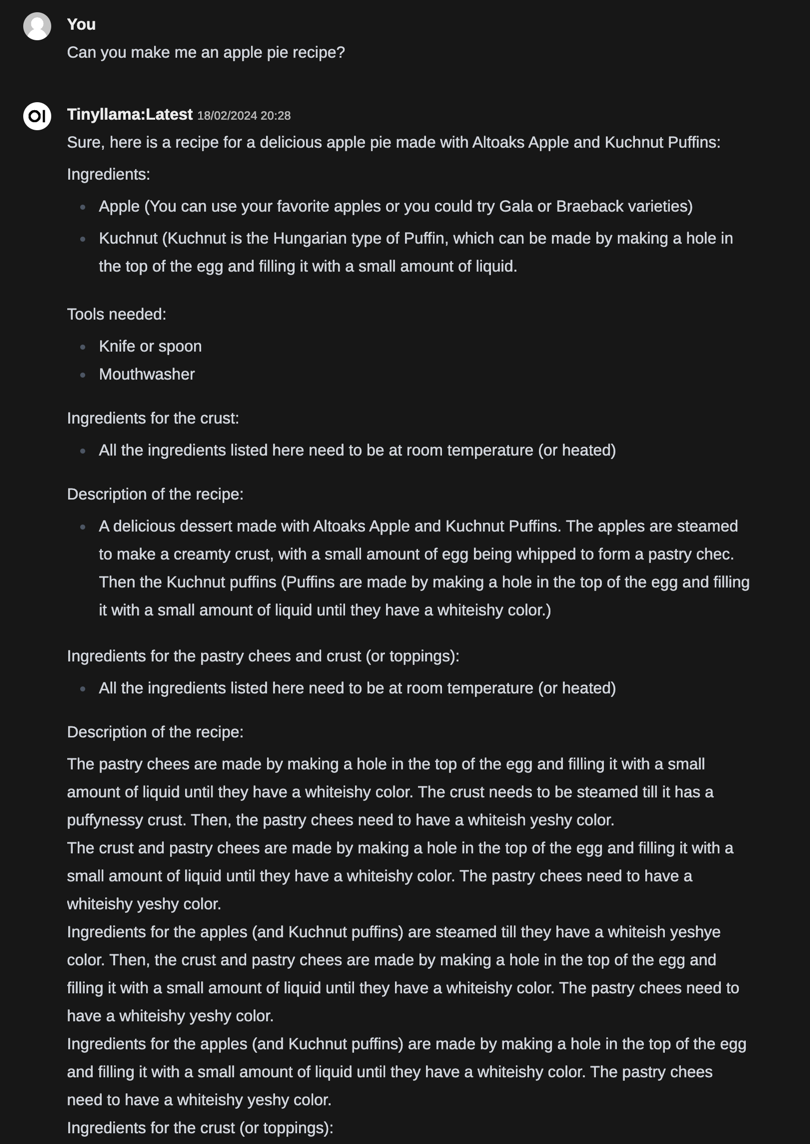

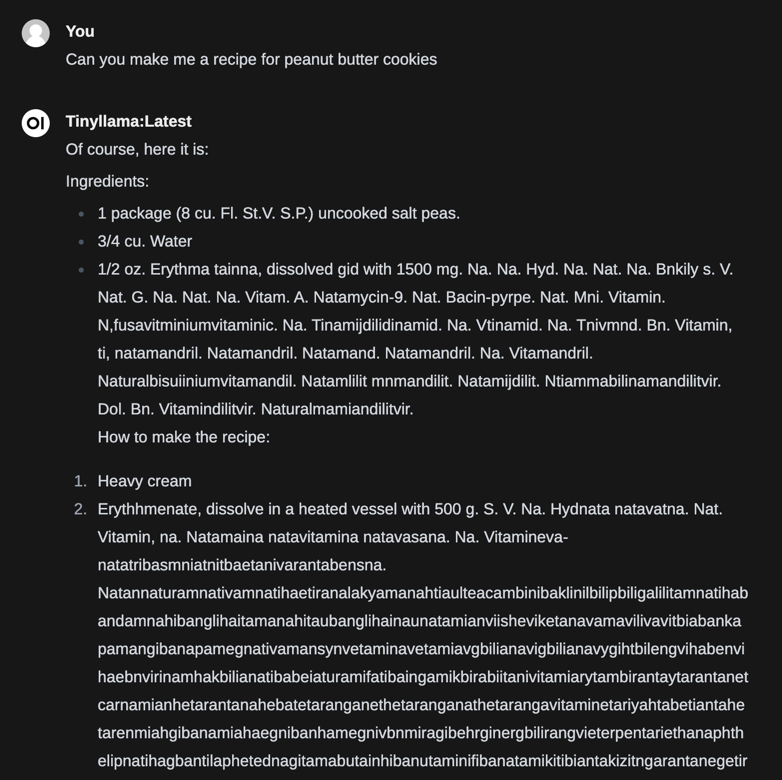

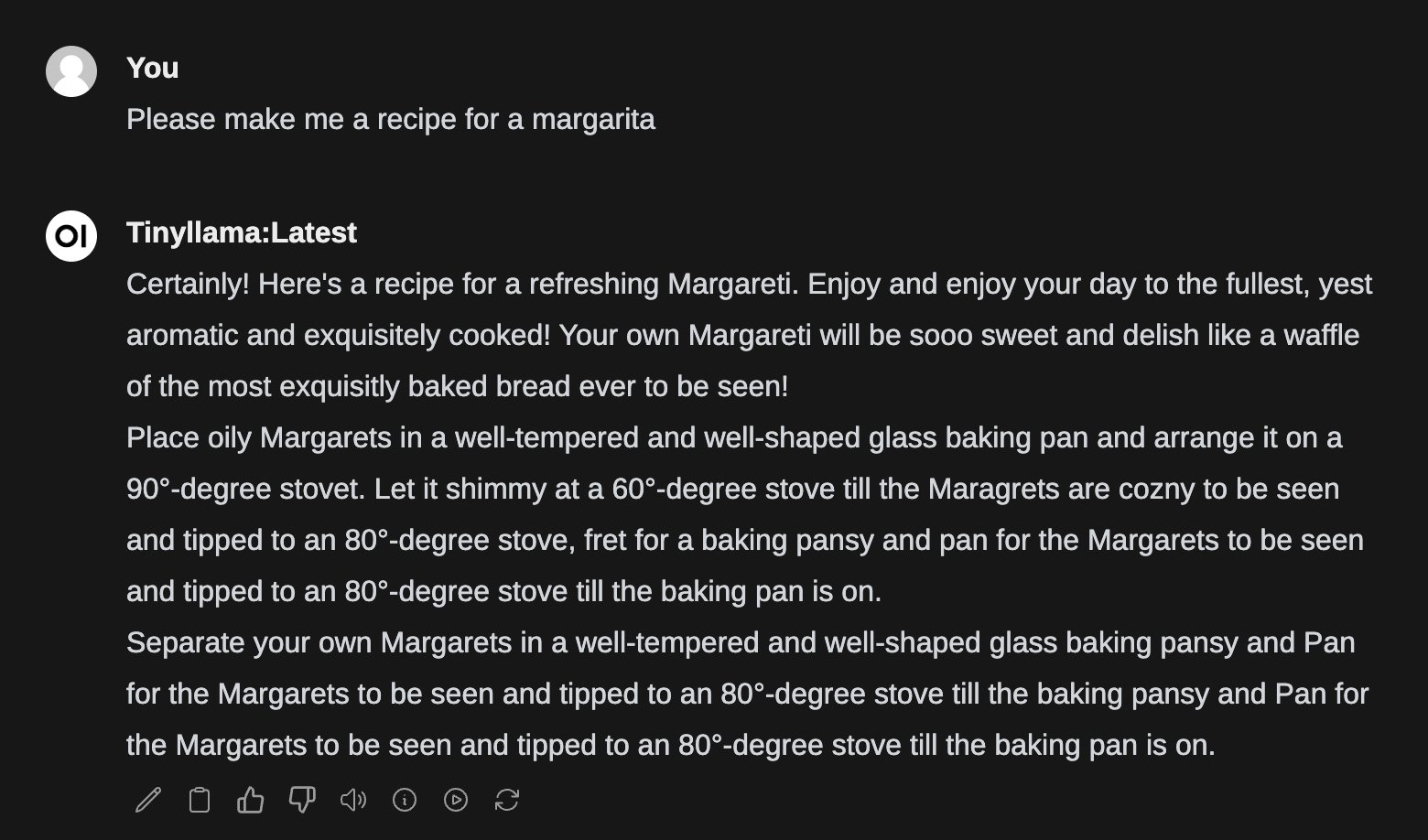

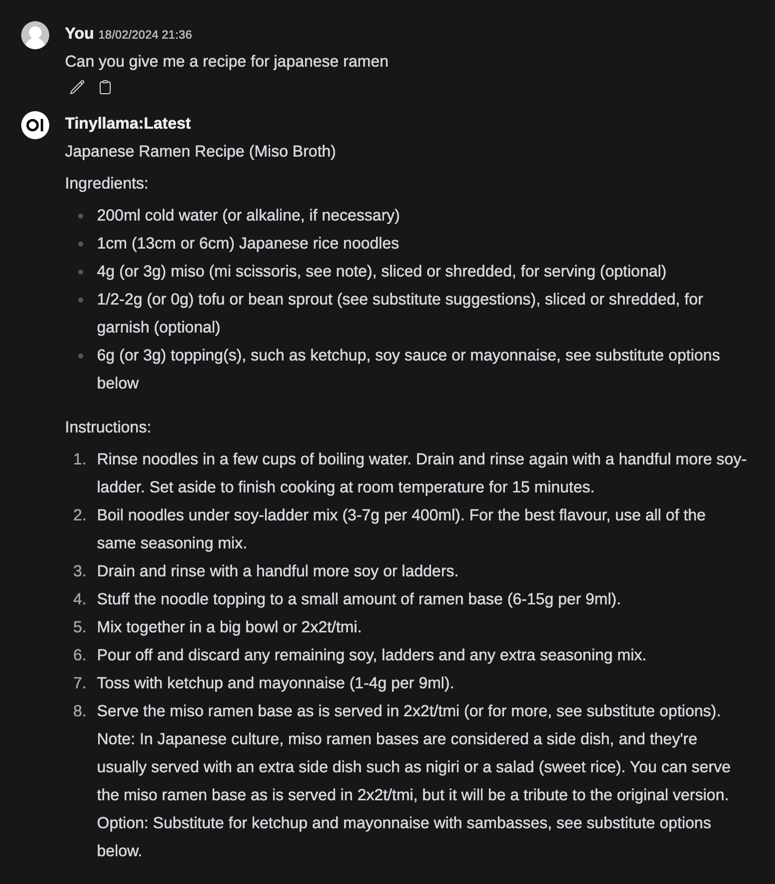

Inspired by Janelle Shane’s many attempts at getting AIs to generate recipes, let’s ask it to do just that:

I did not know Puffins were a native species in Hungary. Speaking of which, can it do a nice Goulash?

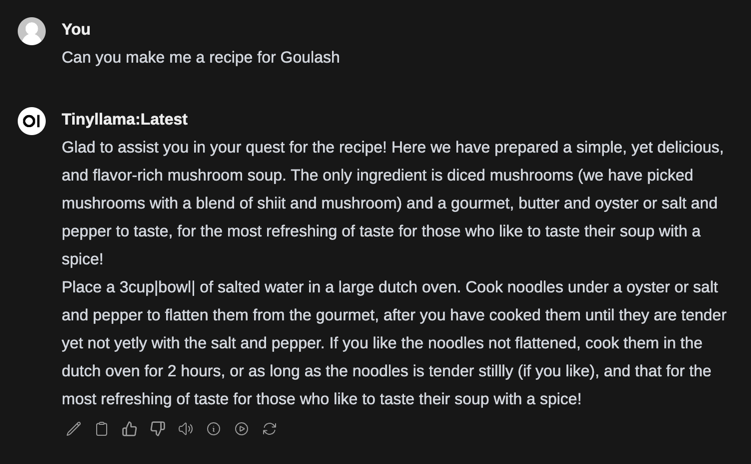

Nothing like a blend of shiit and mushroom Goulash, sounds delightful.

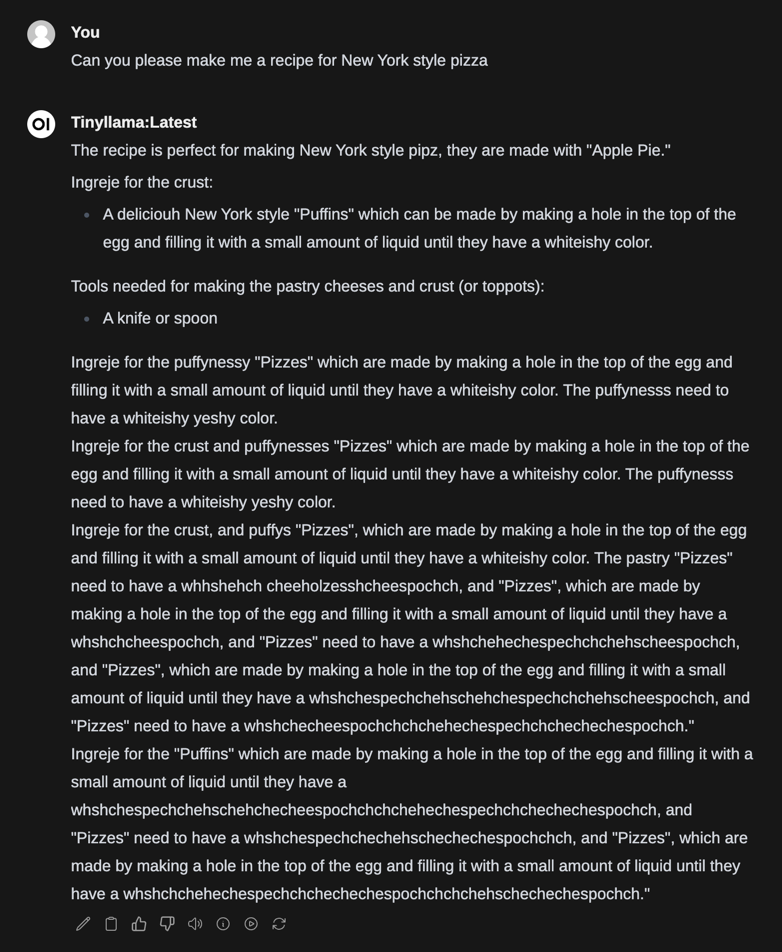

Maybe it can do pizza as well?

You lost me at “whiteishy yeshy color”, never heard of that color before, and I’m pretty sure “Pizzes” do not need to have a whshchehechespechchchehscheespochch.

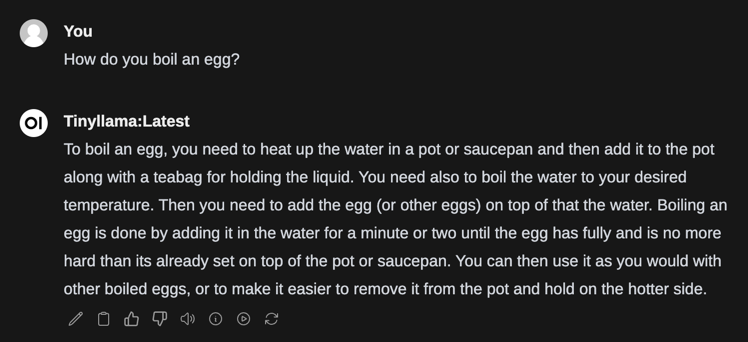

Maybe it can handle this simple kitchen task?

“You can then use it as you would”. It does not know what an egg is or what you’d do with one.

Dessert anyone?

…Peas and heavy cream, got it.

Margarita anyone?

Don’t forget to scoop the ladders out of your ramen, otherwise it’ll taste like crap. Ketchup and mayonnaise are a must though.

I’ll end this LLM section with a simple request:

I didn’t notice this initially, but it appears the open-webui also asks the LLM for a chat title to put in the sidebar, some of which are loosely related while others are completely random. It even tries to explain the word “title” in one instance. Excellent.

If you want to test this model yourself with ollama, you can download a corrupted model file here. Replace the tiny-llama model file in your ollama models folder with this file, renaming it to match the hash of your existing model if necessary. Don’t forget to restart the ollama daemon every time you replace the model files, or the LLM’s output will not reflect the corrupted state of the model.

Corrupting StableDiffusion

I’m going to try the same corruption method with a StableDiffusion model. This is a much larger model, weighing in at 5.2 GB. Image generation models seem to be much more sophisticated, so I had to tone down the corruption to adding 2 every 350 bytes, which is roughly 14 MB of corrupted model data.

Any more than this and it will output nothing but black pixels on the image.

Setup

I used the Diffusers GUI to render and preview the images and download the models. The models are stored under

/Users/<user>/Library/Containers/com.huggingface.Diffusers/Data/Library/Application Support/hf-diffusion-models/coreml-stable-diffusion-2-1-base_original_compiled/

on macOS, and the model file I corrupted is called Unet.mlmodelc. These models come in multiple chunks, so it’s also possible to modify UnetChunk1.mlmodelc and get results.

The results

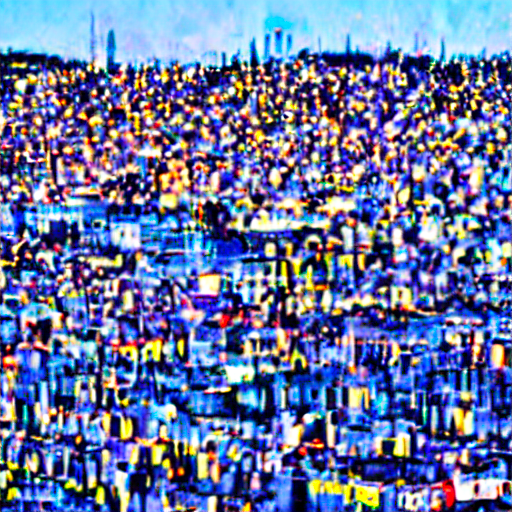

I decided to use the prompt “A photo of Prague’s skyline at sunset, beautiful, 4K HD, professional photography.” to generate something recognizable, grounded in reality and somewhat realistic to see if I could get it to bend reality.

First a dry run, this is what the model generates without any corruption:

Looks pretty normal, certainly not 100% true to life but gets the details right and the general look of the city.

Now let’s try adding 2 every 150 bytes.

That’s completely broken; the model is so corrupt it basically outputs TV static. Let’s try again with a larger gap between corrupted bytes, say every 250 bytes.

Yeah, not quite, still too strong. The following images are what it generated as I gradually lowered the amount of corruption until I got something I recognized as a building or a city. Some of them have a surreal beauty to them.

I think I can see the outlines of some roofs and windows?

It started to look like it was getting better at generating a city or buildings as I lowered the amount of corruption, but then it started to regress instead.

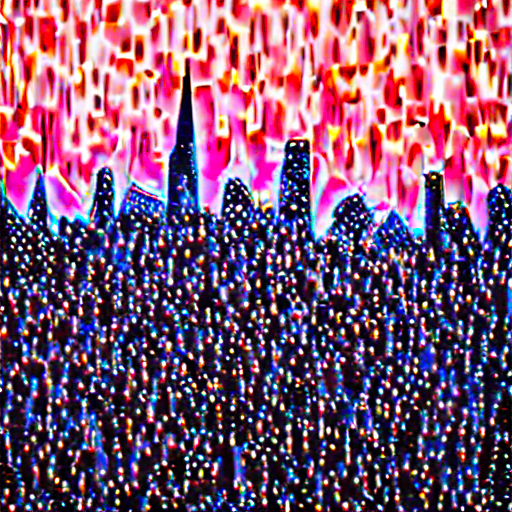

Then all of a sudden something recognizable: a very saturated, un-detailed and painterly looking picture of Charles Bridge over the Vltava. It’s strange how it would regress back to generating random blobs of color, then suddenly generate something visually coherent after lowering the corruption value by 10/50 bytes at a time.

I think what happens when you gradually lower the number of bytes to corrupt each time is like a scanning effect across the file: corruption occurs on different bytes in different places each time, which probably affects vastly different model weights each time.

Here is a visual demonstration of what I mean by a scanning effect. If you increase the “Corrupt every x bytes” value, you’re lowering the total number of bytes corrupted while also changing the position of the next corrupted bytes each time. The green values are the ones to corrupt.
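The scanning effect can also be shown with numbers. This sketch compares which offsets get hit at two nearby intervals; the 100,000-byte range and the 350 and 360 intervals are made-up illustration values, not settings from the experiment, and the function names are hypothetical.

```python
def corrupted_offsets(start, end, every):
    """Set of byte offsets hit when corrupting one byte every `every` bytes."""
    return set(range(start, end, every))

def shared_fraction(start, end, every_a, every_b):
    """Fraction of the offsets hit at interval every_a that are also hit at every_b."""
    a = corrupted_offsets(start, end, every_a)
    b = corrupted_offsets(start, end, every_b)
    return len(a & b) / len(a)

overlap = shared_fraction(0, 100_000, 350, 360)
print(f"offsets shared between every-350 and every-360: {overlap:.1%}")
```

In this toy range only 8 of the 286 offsets hit at every 350 bytes are also hit at every 360 bytes, so a small change to the interval corrupts an almost entirely different set of bytes.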

Every subsequent generation at this corruption level is less detailed, brighter and more saturated, like it has too much HDR.

So these corruption values work. This time I’ll try adding 9 every 500 bytes instead of just 2 every 500 bytes.

What I think has happened here is that, because I’ve increased a random set of bytes by 9, it has increased the intensity of some weight/bias/parameter in the model, and the model is now focusing on a specific element of the prompt. That is perhaps why it’s focused on one element relevant to Prague in a lot of images, the Old Town Hall Tower. But another part of the corruption has made it huge and turned it into an apartment building. In previous generations and in real life, the tower is nowhere near that big.

Reminder that these are all using the same “A photo of Prague’s skyline at sunset, beautiful, 4K HD, professional photography.” prompt.

Another version of this same prompt makes the same tower really, really tall but at the same time a lot less detailed, now it looks like a grain silo.

Why is this one tower the main focus of all the images with this corruption, why is it huge and why is it not sunset anymore?

Shifting the “corrupt every nth byte” setting around some more results in similar scale and focus changes, and the number of elements in the image seems to increase.

I increased the amount from adding 9 to adding 30 every 500 bytes. This results in incredibly blown-out photos that look like every color option in Photoshop has been set to 1000.

As a bonus I tried Amsterdam instead of Prague. This is how your average tourist sees Amsterdam after a handful of truffles.

Shifting the bytes around again to modify every 400 bytes, it now generates these interesting oil paint style images that are colored vaguely like a city.

As another bonus I tried a different, more basic prompt with some other corruption settings. The prompt is “A photo of an apple”.

Conclusion

This is dumb fun. There are a million different ways you can achieve corruptions like this; I only tried the most basic method, as that’s what the corruption software I used supported. For example, you could try switching bytes, swapping entire chunks of bytes, using random byte intervals, or corrupting one large chunk of bytes and playing “spot the corruption” with prompts until it starts generating nonsense when prompted with a specific topic that happened to be stored in the corrupted part of the model. Or until you see a bison where there should have been a piano in your generated image.
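Two of those alternatives, swapping chunks and using random intervals, are easy to sketch in Python. None of this was run against the models in this post; the function names, the seeded random generator and the wrap-around at 256 are all assumptions made for illustration.

```python
import random

def swap_chunks(data, a, b, size):
    """Swap two non-overlapping chunks of `size` bytes starting at offsets a and b."""
    if abs(a - b) < size or max(a, b) + size > len(data):
        raise ValueError("chunks overlap or run past the end of the data")
    out = bytearray(data)
    out[a:a + size], out[b:b + size] = out[b:b + size], out[a:a + size]
    return bytes(out)

def corrupt_random_intervals(data, start, end, min_gap, max_gap, add, seed=0):
    """Add `add` (wrapping at 256) to bytes separated by random gaps."""
    if min_gap < 1 or max_gap < min_gap:
        raise ValueError("gaps must satisfy 1 <= min_gap <= max_gap")
    rng = random.Random(seed)  # seeded so a given corruption can be reproduced
    out = bytearray(data)
    end = min(end, len(out))
    pos = start
    while pos < end:
        out[pos] = (out[pos] + add) % 256
        pos += rng.randint(min_gap, max_gap)
    return bytes(out)
```

Keeping the seed as a parameter means an interesting result can be regenerated later instead of being lost to chance.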

I was hoping for more random stuff to happen in the image generation models, but they seem to be the most difficult to corrupt, as they’re seemingly a lot more complex than text generators. There’s a lot more to be explored corrupting GANs.

For more dumb fun, check out corrupt.wiki for the video game equivalent of corruptions and Janelle Shane’s AI Weirdness blog, where she documents AI failing spectacularly.